Oh, the Pain! iSCSI Boot with Windows Server

OK, the title is tongue in cheek, as it is not all bad. I must say I am a big fan of 'Boot From SAN', as it aligns with the desired end state of decoupling operational state from the physical world, an important attribute of a software-defined, automated world. That is an oversimplification, I know, as there is a lot more to it than just externalising the boot process. Anyway, back to the problem in hand.

I was recently asked whether I could help with an issue a customer had raised. The customer was running Windows Server in an iSCSI boot configuration, but they were not seeing all paths once the server booted into Windows. The problem statement was simple enough:

"When we iSCSI boot, we can only see 2 of an expected 4 paths between the host and the array"

As a first step, I looked to recreate the configuration in the local lab. This would allow me to determine whether the path behaviour was expected or was caused by an issue within the customer's environment. Thankfully the lab environment was very similar to the client's and comprised the following:

- Compute:
  - Cisco UCS Blade Server (B200M4)
  - Adapter: UCS VIC 1340
  - 4 vNICs configured, 2 for general traffic and 2 for iSCSI
  - UCS Firmware 3.1(3c)
  - Windows Server 2016
- Storage:
  - Pure M20 FlashArray
  - Purity 4.10.6
  - Dual 10Gb interfaces in each controller assigned to iSCSI traffic (ETH0, ETH1). In total, 4 iSCSI interfaces (CT0.ETH0, CT0.ETH1, CT1.ETH0, CT1.ETH1) are configured
- Network:
  - Dual Cisco Nexus 5672UP Switches
  - Each controller has an iSCSI interface connected to each of the switches

To leverage all four iSCSI interfaces, we need visibility between the two iSCSI vNICs configured in the UCS Service Profile and the four array iSCSI interfaces. Within the lab setup this was done by configuring two static iSCSI targets per iSCSI vNIC (two static targets is the maximum per vNIC). The objective is to ensure that there is no single point of failure at the network switch or storage array controller level. The diagram below shows how the connectivity is laid out.


As the layout shows, four paths are expected to be accessible from the iSCSI hardware initiator configuration. This was confirmed when powering on the Service Profile: from within the array, all four paths could be seen as active on the initial boot.
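To make the path arithmetic concrete, here is a minimal Python sketch that enumerates the expected paths and checks the "no single point of failure" goal. The vNIC names and the assignment of array ports to each vNIC are assumptions for illustration only; the actual mapping comes from the static targets in your own Service Profile.

```python
# Hypothetical mapping of each iSCSI vNIC to its two static targets.
# The vNIC names and the port assignment are assumptions, not taken
# from the lab configuration described in this post.
STATIC_TARGETS = {
    "iscsi-vnic-a": ["CT0.ETH0", "CT1.ETH0"],
    "iscsi-vnic-b": ["CT0.ETH1", "CT1.ETH1"],
}


def controller_of(port):
    """Return the controller part of a port name such as 'CT0.ETH1'."""
    return port.split(".")[0]


def expected_paths(static_targets):
    """One path per (vNIC, array port) pair."""
    return [(vnic, port)
            for vnic, ports in static_targets.items()
            for port in ports]


def survives_single_failure(static_targets):
    """True if losing any one vNIC or any one controller leaves a path."""
    paths = expected_paths(static_targets)
    vnics = {vnic for vnic, _ in paths}
    controllers = {controller_of(port) for _, port in paths}
    vnic_ok = all(any(v != failed for v, _ in paths) for failed in vnics)
    ctrl_ok = all(any(controller_of(p) != failed for _, p in paths)
                  for failed in controllers)
    return bool(paths) and vnic_ok and ctrl_ok


if __name__ == "__main__":
    print(len(expected_paths(STATIC_TARGETS)))      # 4
    print(survives_single_failure(STATIC_TARGETS))  # True
```

Two vNICs with two static targets each gives the four paths expected above. The check treats each iSCSI vNIC as standing in for its network side, which is a simplification.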

A barebones setup with minimal tweaking, however, will only have two active paths once Windows is loaded, even with the Windows MPIO component (i.e. the Windows built-in multi-pathing software) installed. In fact, the number of active paths changed as follows at various stages of the boot and build process:

- Initial boot with iSCSI boot configuration loaded had 4 paths active

- Booted into install disk ready to commence the installation of Windows had 1 path active

- On completion of the installation of Windows 2016, 2 paths are shown as active

- Adding the Cisco Network drivers still only showed 2 paths active

I ran through a number of tests with varying configurations at both the UCS Service Profile and within Windows with varying results. In the end though, I was able to build and configure Windows so that all four paths were active when accessible.

To get to this point there are some considerations to keep in mind when setting your configuration parameters such as:

- Windows only supports a single iSCSI Qualified Name (IQN)

- Once Windows loads, only one of the target interfaces configured in the boot parameters of each iSCSI vNIC in the UCS Service Profile will be active.

- Within Windows, there is no ability to manipulate the physical iSCSI setup state and, as such, you cannot activate the additional paths with multi-pathing software (i.e. the Windows MPIO component) alone.

- The physical iSCSI boot settings, such as devices assigned and sessions established (the active paths), are reported within the software iSCSI initiator, but only as read-only. As such, no settings can be changed or sessions added.

- iSCSI Hardware and Software Initiator configurations can be applied to the same Network Interface

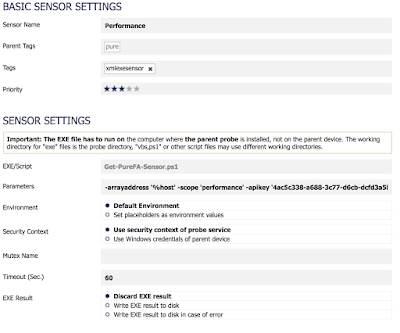

In the end, four active paths were achieved from the default Windows installation with the following configuration changes:

- Ensuring a single IQN was used within the UCS Service Profile

- Configuring the TCP/IP details for each of the iSCSI interfaces within Windows to match the settings in the vNICs' iSCSI Boot Parameters. This step is required to allow us to add sessions within the iSCSI Software Initiator

- Installing and configuring the Windows Multi-path component MPIO

- Configuring the Windows software iSCSI Initiator to activate all paths by adding new sessions for the missing paths

More specifics on some of these steps follow below. Details such as path policy and session authentication for iSCSI are not covered, as there is plenty of material available on them and they are not part of the problem statement.

UCS IQN Configuration

UCS allows IQNs to be defined at three different points within the Service Profile, as shown in the diagram below. Multiple IQNs are possible because IQNs can be set at the vNIC level, either within the vNIC settings themselves or within the iSCSI boot parameters assigned to each vNIC.

Figure: UCS Service Profile IQN Configuration Points

If no IQN is set at a given point, IQN inheritance is applied in the order shown below.

Figure: UCS Service Profile IQN Inheritance Order

Figure: UCS Service Profile Global IQN Configuration Point

Windows Network Interface Address Details

Windows is able to recognise the address details applied within the UCS Service Profile's vNIC iSCSI Boot Parameters (running 'ipconfig' in a command prompt lists the address details for each NIC). However, the software initiator can only apply settings for a network adapter when the address details are also set within Windows. If the address details are configured for iSCSI boot but not present locally, the initiator reports the settings as read-only when you try to change them.

By default, the interfaces within Windows have the initial DHCP-based configuration, as per any new interface. You can change this to reflect the settings configured within the iSCSI Boot Parameters.
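As an illustration of mirroring the boot parameters into Windows, the Python sketch below assembles the PowerShell commands for one interface. `Set-NetIPInterface` and `New-NetIPAddress` are standard Windows networking cmdlets, but the interface alias and addresses are hypothetical placeholders, and the sketch only prints the commands rather than running them. Treat it as a starting point to verify against your own environment.

```python
import ipaddress


def static_ip_commands(alias, address, netmask):
    """Build PowerShell commands that set a static IPv4 address.

    alias, address and netmask should mirror the values in the vNIC's
    iSCSI Boot Parameters; the values used below are placeholders.
    """
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    prefix = iface.network.prefixlen  # e.g. 255.255.255.0 -> 24
    return [
        f'Set-NetIPInterface -InterfaceAlias "{alias}" -Dhcp Disabled',
        f'New-NetIPAddress -InterfaceAlias "{alias}" '
        f'-IPAddress {iface.ip} -PrefixLength {prefix}',
    ]


if __name__ == "__main__":
    # Hypothetical interface name and addressing, for illustration only.
    for cmd in static_ip_commands("iSCSI-A", "192.168.10.11", "255.255.255.0"):
        print(cmd)
```

Repeat for each iSCSI interface, using the address details from the matching vNIC.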

Install and Configure MPIO

Just a quick note: once MPIO is installed, two further steps are required. The storage device IDs must be added to MPIO, and support for iSCSI devices must be enabled. Once this is done, a reboot is required.
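For those who prefer scripting, the two MPIO steps can be sketched as PowerShell commands, assembled here in Python. The cmdlets (`Install-WindowsFeature`, `New-MSDSMSupportedHW`, `Enable-MSDSMAutomaticClaim`) ship with Windows Server, but the vendor and product ID strings shown are assumptions and should be checked against your array vendor's documentation before use.

```python
def mpio_setup_commands(vendor_id, product_id):
    """Build the PowerShell commands for the MPIO steps described above.

    vendor_id and product_id identify the storage device to MPIO; the
    values passed in below are assumptions, not confirmed settings.
    """
    return [
        # Install the Windows MPIO feature.
        "Install-WindowsFeature -Name Multipath-IO",
        # Step 1: add the storage device IDs into MPIO.
        f'New-MSDSMSupportedHW -VendorId "{vendor_id}" '
        f'-ProductId "{product_id}"',
        # Step 2: enable MPIO support for iSCSI devices.
        "Enable-MSDSMAutomaticClaim -BusType iSCSI",
    ]


if __name__ == "__main__":
    # Assumed ID strings for a Pure FlashArray; verify before use.
    for cmd in mpio_setup_commands("PURE", "FlashArray"):
        print(cmd)
    print("# Reboot once these have completed.")
```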

Configure iSCSI Initiator

Within the iSCSI administration tool, settings such as the destination target, sessions and accessible devices are reported as expected. What cannot be done is add further sessions (paths) until the address details are set on the corresponding network interfaces within Windows. Once that is done, you can add the missing sessions using the following steps.

Within the iSCSI Admin Tool, validate that the array is listed under 'Target Portals' in the Discovery tab. If not, add the details of each of the array's iSCSI interfaces (assuming iSNS services are not present).

Once done, switch to the 'Targets' tab and select the 'Connect' button.

Ensure 'Multipath' is selected and click 'Advanced'.

You can now create additional sessions to cover the missing paths. In the case of the Pure FlashArray, the missing paths can be identified at the array by looking at the connection map for the target host.

For each session, specify the local software initiator, the interface IP and the target array IP, then select OK to have it created.

Sessions can then be validated within the iSCSI Admin Tool via the <Properties> button on the 'Targets' tab.
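The same steps can be approximated in script form. The Python sketch below works out which paths are missing and builds a `Connect-IscsiTarget` command for each one. `Connect-IscsiTarget` is a standard Windows cmdlet, but every address and the target IQN here are hypothetical placeholders; in practice the active paths would come from the array's connection map or from `Get-IscsiSession`.

```python
# Hypothetical (initiator IP, target IP) pairs; none of these addresses
# come from the lab described in this post.
EXPECTED_PATHS = [
    ("192.168.10.11", "192.168.10.101"),
    ("192.168.10.11", "192.168.10.103"),
    ("192.168.10.12", "192.168.10.102"),
    ("192.168.10.12", "192.168.10.104"),
]

# The two paths assumed to be active after a default install.
ACTIVE_PATHS = [
    ("192.168.10.11", "192.168.10.101"),
    ("192.168.10.12", "192.168.10.102"),
]


def missing_sessions(expected, active):
    """Return expected paths that have no active session, sorted."""
    return sorted(set(expected) - set(active))


def connect_commands(target_iqn, sessions):
    """Build one Connect-IscsiTarget command per missing session."""
    return [
        f'Connect-IscsiTarget -NodeAddress "{target_iqn}" '
        f"-InitiatorPortalAddress {initiator_ip} "
        f"-TargetPortalAddress {target_ip} "
        "-IsMultipathEnabled $true"
        for initiator_ip, target_ip in sessions
    ]


if __name__ == "__main__":
    # Placeholder IQN; use the target name shown in the 'Targets' tab.
    iqn = "iqn.2010-06.com.purestorage:flasharray.example"
    for cmd in connect_commands(iqn, missing_sessions(EXPECTED_PATHS,
                                                      ACTIVE_PATHS)):
        print(cmd)
```

With the placeholder data above, two commands are produced, one for each path that the default installation did not bring up.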